How ISVs and startups scale on DigitalOcean Kubernetes: Best practices for DOKS adoption

Our mission at DigitalOcean is simple: to provide you with the tools and infrastructure needed to scale exponentially and accelerate a successful cloud journey. Many ISVs (Independent Software Vendors) and startups, including Snipitz, ScraperAPI, NitroPack, Zing, and Bright Data, have scaled successfully and grown rapidly on DigitalOcean Kubernetes. In this post, we walk you through their successes and share best practices for adopting Kubernetes.

These innovators adopted DigitalOcean’s Kubernetes platform to scale their applications efficiently and effectively. Kubernetes lets ISVs manage containerized applications and automate their deployment, scaling, and management, so they can scale their services rapidly without worrying about the underlying infrastructure and focus on developing their software. Kubernetes on DigitalOcean also provides autoscaling, load balancing, and self-healing, which directly serve ISVs that need to keep their applications reliable and performant for their customers. As a result, ISVs can streamline their operations, reduce overhead costs, and scale their applications as their business grows.

What makes DigitalOcean Kubernetes stand out

DigitalOcean Kubernetes (DOKS) offers a fully managed, CNCF-compliant Kubernetes service that stands out for its simplicity, affordability, and powerful ecosystem designed to streamline operations beyond initial deployment. DOKS provides an exceptional return on investment for ISVs, startups, and growing digital businesses through:

- Simplified user experience: DOKS simplifies the Kubernetes experience, requiring just a single command or one click in the UI to create a cluster. This streamlined approach extends to simple configuration of the cluster autoscaler, horizontal pod autoscaler, load balancers, DBaaS, and block storage. For non-HA clusters, DOKS provides production-grade reliability through fast control plane repairs, minimizing downtime and technical overhead.

- Fixed and predictable pricing model: Customers get a transparent and predictable cost structure with DOKS. There are no control plane fees unless you choose a High Availability (HA) setup. There are also no fees for surge upgrades, which allow up to 10 extra nodes to be created during upgrades. Importantly, billing starts only once a node becomes an active part of the cluster, not from the moment it boots, so you pay only for what you use. DigitalOcean Container Registry (DOCR) has fixed-price tiers and currently no extra charges for egress.

- Ample egress data transfer: Each Droplet in DOKS comes with a generous egress data transfer pool, ranging from 500GB to over 5TB per Droplet. With this bandwidth allocation, most users never exceed their free bandwidth quota, which reduces unexpected costs.

- Versatile range of virtual machines: DOKS offers a versatile selection of worker nodes (Droplets) for a wide array of workloads. Whether you need shared resources for cost efficiency, dedicated resources for performance, or CPU-optimized or memory-optimized nodes, DOKS has options to suit your requirements.

- Marketplace add-ons: DigitalOcean’s Kubernetes 1-Click apps streamline day-2 operations such as ingress, monitoring, and logging. SnapShooter offers backup and restoration for Kubernetes applications. For a managed SaaS experience, you can choose from various SaaS add-ons available in the Marketplace.
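To make the horizontal pod autoscaler (HPA) configuration mentioned in the list above less of a black box, here is a minimal Python sketch of the core rule documented for the upstream Kubernetes HPA: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default) of 1.0. The function name and the default minimum and maximum bounds are illustrative assumptions, and the sketch ignores stabilization windows, pod readiness, and missing metrics, all of which the real controller also considers.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale replicas by the ratio of current to target metric.

    Hypothetical helper for illustration; the min/max defaults are assumptions.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6 pods.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

In a real cluster you declare the target instead, for example an `autoscaling/v2` HorizontalPodAutoscaler with a CPU `averageUtilization` target, and the controller applies this logic for you.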

Our customers, finding success in industries ranging from AI & data platforms to web applications, online learning platforms, blockchain, video streaming, gaming, broadcasting, and digital marketing, showcase the versatility and capability of DOKS to support a wide spectrum of services. For instance, Bright Data leverages DOKS for web data indexing at scale, NitroPack accelerates site speeds for CMS-based websites, Atom Learning enhances online education, and Shoppermotion innovates in retail analytics through IoT. These examples highlight how businesses across different industries utilize DOKS to drive efficiency, innovation, and scalability.

“Our load is dynamic, low on weekends and high during the week. We used the DigitalOcean API to create Droplets, put an image on them, and set up our system, but at the end of the day, that’s not as powerful as Kubernetes. We wanted to solve our underutilized Droplets fast, and the only solution that came to mind was DigitalOcean Kubernetes.” - Nir Borenshtein, COO, Bright Data

Discover more about how these ISVs thrive on DOKS in our customer case studies, including architecture diagrams, under the Kubernetes section of the DigitalOcean Customer Case Studies portal. In the following sections, we focus specifically on the enablement path for the ISV journey.

Challenges in adopting and scaling on Kubernetes

Efficiency is paramount for ISVs. Many operate with lean development teams and move from discovery to production in just weeks. Kubernetes has become the go-to platform for containerized workloads because of its scalability, portability, and rich ecosystem, and it is increasingly common for new products to support Kubernetes from their first release.

Managed Kubernetes services streamline platform management, but many leave day-2 operations to the user. Here’s a closer look at what these platforms typically handle versus what they leave to you:

| Managed responsibilities | User responsibilities |
|---|---|
| Control plane management: Ensures the central orchestration layer is running smoothly. | Application and connectivity monitoring: Overseeing the performance and health of deployed applications. |
| Platform software upgrades: Keeps the Kubernetes version up to date with the latest stable releases. | Observability: Implementing systems to collect, aggregate, and analyze logs, metrics, and traces. |
| Disaster recovery: Restores the control plane and application configurations in case of catastrophic failures. | Application backups and disaster recovery: Safeguarding application data against loss or corruption. |

Creating a Kubernetes cluster is straightforward—often just a single command away. Yet, deploying and managing production applications requires considerable additional effort. Regular code updates and varying workload demands (e.g., sudden spikes in streaming services) necessitate careful planning and execution. The challenges typically fall into several key areas:

- Automation: While initial deployments are simple, maintaining and updating applications can become complex due to unique configurations. Automation is essential for efficiency and consistency. DOKS provides ready-made blueprints and Terraform scripts for automation.

- Developer productivity: Traditional deployment methods can hinder productivity. Fast, efficient development cycles are crucial and require optimized processes for building and deploying images.

- Observability: Kubernetes generates a vast amount of logs and metrics. Although Kubernetes simplifies log and metric collection, a dedicated platform for aggregation and analysis is essential. DOKS provides Marketplace 1-Clicks for observability (the Prometheus/Grafana/Loki stack) and a 1-Click for the Kubernetes Dashboard. Additionally, Cilium Hubble with flow logs is enabled by default for network troubleshooting.

- Scale: Applications may require DNS scaling or rapid autoscaling. High pod density on nodes demands optimal performance from the underlying CNI, and common scaling challenges center on the cluster autoscaler, DNS, and CNI performance. DOKS uses a containerized control plane (control plane components run as containers), which is designed to scale rapidly when a business spike drives a corresponding surge in cluster scaling.

- Troubleshooting: Issues can emerge in various areas, including ingress setup, cluster upgrades, storage connections, and resource allocation. Preparedness and knowledge are key to effective resolution.

- Disaster preparedness: While the cloud provider secures the control plane, application data and configurations need separate backup and recovery strategies. SnapShooter (part of DigitalOcean) now supports DOKS cluster discovery and backup.

- Security: Good security practices should be an integral part of the developer and cluster lifecycle.
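The Automation item above notes that repeatable setup is the foundation for everything else, and cluster creation itself is a single API call that is easy to script. The Python sketch below only builds (and does not send) a request to the DigitalOcean API’s Kubernetes cluster-creation endpoint, with a node pool that the cluster autoscaler can resize. The cluster name, region, Droplet size, node counts, and the `"latest"` version value are illustrative assumptions; check the DigitalOcean API reference for the current field list before relying on it.

```python
import json
import os
import urllib.request

API_URL = "https://api.digitalocean.com/v2/kubernetes/clusters"


def build_create_cluster_request(token: str) -> urllib.request.Request:
    """Build (but do not send) a DOKS cluster-creation request.

    All values below are illustrative placeholders, not recommendations.
    """
    payload = {
        "name": "staging-cluster",  # hypothetical cluster name
        "region": "nyc1",
        "version": "latest",  # assumption: resolves to the newest available version
        "node_pools": [
            {
                "name": "default-pool",
                "size": "s-2vcpu-4gb",
                "count": 2,
                # Let the cluster autoscaler add or remove nodes within these bounds.
                "auto_scale": True,
                "min_nodes": 2,
                "max_nodes": 5,
            }
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_create_cluster_request(os.environ.get("DIGITALOCEAN_TOKEN", "dummy-token"))
    print(req.get_method(), req.full_url)
    # To actually create the cluster, send it: urllib.request.urlopen(req)
```

The `doctl kubernetes cluster create` command performs the same operation from the command line, and the same payload shape is what infrastructure-as-code tools manage for you.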

Fortunately, addressing these challenges primarily requires a one-time investment in automation and planning, except for ongoing troubleshooting efforts.

In the following section and future blog posts, we will explore patterns and best practices derived from our experiences with a diverse range of customers, aiming to navigate these challenges successfully on DigitalOcean.

Best practices checklist for Kubernetes adoption

Developer productivity

Maximizing developer productivity involves streamlining the development and deployment process in Kubernetes environments. This checklist provides targeted recommendations to enhance efficiency and reduce overhead.

Checklist: Adopt the right set of tools to improve productivity

Explore and adopt tools that improve productivity. Some examples include the following, ordered by usefulness:

- k9s: This terminal-based UI improves cluster management by providing a real-time view of cluster activity and resources, making it easier to monitor and manage applications.

- stern: Tail logs from multiple pods concurrently. It’s invaluable for debugging complex issues that span multiple services.

- k8sgpt: Use AI to help with troubleshooting.

- kubectx/kubens: Quickly switch between clusters and namespaces, streamlining your workflow when managing multiple environments.

- k8syaml: A tool that helps generate and validate Kubernetes YAML files, ensuring your configurations are correct and ready for deployment.

- kustomize: Embrace configuration as code by customizing application resources without altering their original manifests, making deployments more manageable and repeatable.
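kustomize’s core idea, a shared base plus small per-environment overlays that never edit the base, can be illustrated without kustomize itself. The Python sketch below applies an overlay using RFC 7386 JSON Merge Patch semantics. This is a deliberate simplification: kustomize typically uses strategic merge patches, which merge lists such as `containers` by name, whereas a JSON merge patch replaces lists wholesale. The `base` and `production_overlay` manifests are hypothetical and trimmed.

```python
import copy


def merge_patch(target, patch):
    """Apply an RFC 7386 JSON Merge Patch, returning a new object."""
    if not isinstance(patch, dict):
        # Scalars and lists replace the target value outright.
        return copy.deepcopy(patch)
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)  # null removes the key
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


# Hypothetical (trimmed) base Deployment shared by every environment.
base = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {"replicas": 1},
}

# Hypothetical production overlay: more replicas and an extra label.
production_overlay = {
    "metadata": {"labels": {"env": "production"}},
    "spec": {"replicas": 3},
}

production = merge_patch(base, production_overlay)
print(production["spec"]["replicas"], production["metadata"]["labels"])
```

The base stays untouched, so staging and production can share it and differ only in their overlays.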

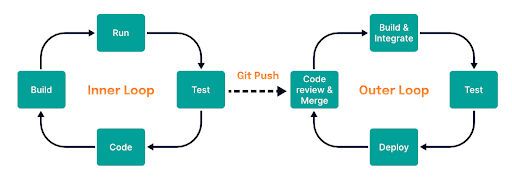

Checklist: Establish inner loop development

Traditional Kubernetes deployment cycles involve multiple steps: containerizing an application, pushing the image to a registry, updating the manifest, and applying it to the cluster. This process can significantly slow down development, especially during rapid iteration. The inner loop development approach optimizes the cycle of writing code and observing its effects in a live environment. Giving developers immediate feedback on every change dramatically increases efficiency.

Some tools for inner loop development include the following.

- Skaffold: Automates many of the tasks involved in building, pushing, and deploying applications, making it easier to iterate on code changes.

- Tilt: Optimizes the development cycle by monitoring file changes and automatically updating the environment in real time.

- Telepresence: Creates a bidirectional network bridge between your local development environment and the Kubernetes cluster, allowing for seamless testing and debugging.

- DevSpace: Offers a streamlined workflow for developing and deploying applications to Kubernetes, including powerful features for building, testing, and debugging directly in the target environment.
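These tools differ in detail, but they share a core loop: detect which files changed, then either copy them into the running container or rebuild and redeploy the image (Skaffold calls the former file sync; Tilt calls it live update). A minimal Python sketch of that decision follows. The content-hash snapshot approach and the `SYNCABLE_SUFFIXES` rule (source and template files sync, anything else triggers a rebuild) are assumptions for illustration, not how any of these tools is implemented.

```python
from __future__ import annotations

import hashlib
import tempfile
from pathlib import Path

# Assumption: these file types can be hot-synced; anything else needs a rebuild.
SYNCABLE_SUFFIXES = (".py", ".html", ".css")


def snapshot(root: str) -> dict[str, str]:
    """Map each file under root to a SHA-256 digest of its contents."""
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in Path(root).rglob("*")
        if p.is_file()
    }


def changed_files(before: dict[str, str], after: dict[str, str]) -> set[str]:
    """Paths added, removed, or modified between two snapshots."""
    return {p for p in before.keys() | after.keys() if before.get(p) != after.get(p)}


def next_action(changed: set[str]) -> str:
    """Decide how to update the dev environment for a set of changed paths."""
    if not changed:
        return "none"
    if all(path.endswith(SYNCABLE_SUFFIXES) for path in changed):
        return "sync"  # copy files into the running container
    return "rebuild"  # rebuild the image and redeploy


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        (Path(root) / "app.py").write_text("print('v1')\n")
        (Path(root) / "Dockerfile").write_text("FROM python:3.12-slim\n")
        before = snapshot(root)
        (Path(root) / "app.py").write_text("print('v2')\n")
        changed = changed_files(before, snapshot(root))
        print(changed, next_action(changed))
```

Syncing source files skips the build-push-deploy round trip entirely, which is where most of the inner loop speedup comes from; changes to the Dockerfile or dependencies still require a rebuild.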

Automation (CI/CD)

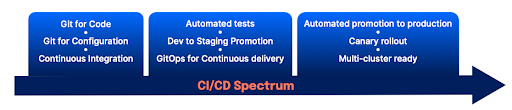

CI/CD readiness is not merely about selecting the right tools; it is a journey that combines DevOps practices and automation to significantly influence success.

The pathway from code to deployment can be divided into four key stages:

- Git Repository Branching Strategy: Choosing the right branching strategy is crucial. For SMBs with smaller teams, GitHub Flow is often ideal because of its simplicity and its pull request (PR)-based approach to code commits. This fosters a collaborative, iterative development process that encourages code review and feedback. Other widely used branching strategies include GitLab Flow and trunk-based development.

- Build Pipeline Execution: Triggered by a PR or on a schedule, this stage builds the container image from the committed code and pushes it to your container registry. It ensures that the code is packaged correctly and ready for deployment.

- Application Manifest Configuration: Once the new image is built, update the application manifests with the latest image reference. This step ensures that the deployment uses the correct version of your application.

- Rollout Strategy: Deploying the image to the cluster is the final stage of your CI/CD pipeline. Strategies such as direct deployment, blue-green deployments, or canary rollouts can be chosen based on the risk tolerance and requirements of the project.
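The build and manifest stages above can be sketched in a few lines of Python: tag the image with the commit SHA, then rewrite the matching container’s `image` field in the Deployment manifest. The registry name `example-registry`, the `web` app and container names, and the 7-character SHA tag convention are hypothetical. In practice, `kustomize edit set image` or a GitOps image-update tool can perform the same rewrite.

```python
import copy

# Hypothetical DOCR registry; DOCR image paths take the form
# registry.digitalocean.com/<registry-name>/<image>:<tag>.
REGISTRY = "registry.digitalocean.com/example-registry"


def image_ref(app: str, git_sha: str) -> str:
    """Tag the image with the short commit SHA so every build is traceable."""
    return f"{REGISTRY}/{app}:{git_sha[:7]}"


def set_container_image(manifest: dict, container: str, image: str) -> dict:
    """Return a copy of a Deployment manifest with one container's image replaced."""
    updated = copy.deepcopy(manifest)
    for c in updated["spec"]["template"]["spec"]["containers"]:
        if c["name"] == container:
            c["image"] = image
            return updated
    raise KeyError(f"container {container!r} not found in manifest")


# Hypothetical, trimmed Deployment manifest.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "template": {
            "spec": {"containers": [{"name": "web", "image": f"{REGISTRY}/web:0000000"}]}
        }
    },
}

new_image = image_ref("web", "3f9c2a1d0e8b7c6a5f4e3d2c1b0a9f8e7d6c5b4a")
updated = set_container_image(deployment, "web", new_image)
print(updated["spec"]["template"]["spec"]["containers"][0]["image"])
```

Tagging by commit SHA rather than a floating tag such as `latest` means every manifest change points at one exact build, which keeps rollouts and rollbacks unambiguous.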

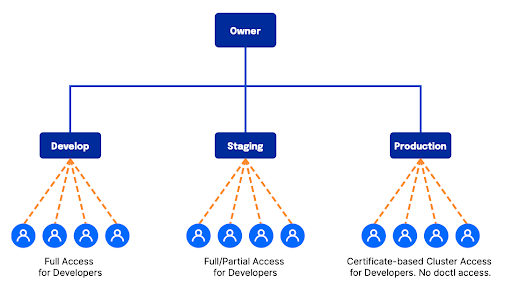

Checklist: Establish a staging environment

Maintaining a 24x7 staging environment in a separate cluster simplifies testing and operations. It allows for thorough vetting of changes in a production-like environment without impacting actual users.

Implement distinct roles for staging versus production environments. Restrict access to production to minimize risks and enforce stricter controls over changes.
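One way to implement distinct roles is Kubernetes RBAC: give most developers read-only access in the production cluster while CI and a small group of operators hold write access. The Python sketch below emits a `Role` and `RoleBinding` as a JSON `List`, a format `kubectl apply -f` accepts. The `production-viewer` role, `web` namespace, and `developers` group are hypothetical, and how your users map to Kubernetes groups depends on your cluster’s authentication setup.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def read_only_role(name: str, namespace: str) -> dict:
    """A namespaced Role that can view workloads and logs but not change them."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": ["", "apps"],  # core group and apps group
                "resources": ["pods", "pods/log", "services", "deployments"],
                "verbs": ["get", "list", "watch"],  # no create/update/delete
            }
        ],
    }


def bind_role_to_group(role: str, namespace: str, group: str) -> dict:
    """Grant the Role to every member of a group within the namespace."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role}-{group}", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": role, "apiGroup": RBAC_API},
    }


manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        read_only_role("production-viewer", "web"),
        bind_role_to_group("production-viewer", "web", "developers"),
    ],
}

if __name__ == "__main__":
    # Pipe the output into: kubectl apply -f -
    print(json.dumps(manifests, indent=2))
```

In the staging cluster, the same group could be bound to a broader role, so developers iterate freely there while production changes flow only through the pipeline.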

Checklist: Use GitOps for deployment

Use your CI pipeline (e.g., GitHub Actions) or tools such as Kaniko to build container images. Automate deployments to staging to enable continuous testing and validation.

For production deployments, start with manual approvals and then progress to continuous deployment:

- Manual approvals for production: Although automation streamlines operations, adding manual approvals to your production deployment process provides an extra layer of quality assurance. This extra step helps maintain the stability and quality of your production environment.

- Continuous deployment: Once you’ve established robust testing procedures and automation, consider moving to a continuous deployment model. This approach enables seamless transitions from development to production, reducing time to market for new features and fixes.

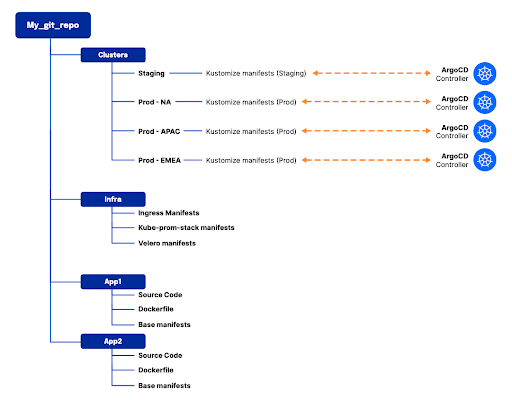

Adopting GitOps practices, where the cluster configuration is kept in sync with a Git repository, offers a robust method for managing deployments. Tools like ArgoCD and Flux automate the synchronization, providing a clear audit trail and simplifying rollback procedures if needed.
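At their core, GitOps tools such as Argo CD and Flux repeatedly compare the desired state in Git with the live state in the cluster and reconcile the difference. The Python sketch below shows only that comparison step, under simplifying assumptions: resources are identified by kind, namespace, and name; drift is detected by comparing `spec` fields directly (real tools normalize server-side defaults and diff more carefully); and deletion happens only when pruning is enabled, similar in spirit to the prune option both tools offer. The manifests are hypothetical.

```python
from __future__ import annotations


def resource_key(obj: dict) -> tuple[str, str, str]:
    """Identify a resource by kind, namespace, and name."""
    meta = obj["metadata"]
    return (obj["kind"], meta.get("namespace", "default"), meta["name"])


def plan_sync(desired: list[dict], live: list[dict], prune: bool = False) -> dict[str, list]:
    """Compute which resources to create, update, or delete to match Git."""
    desired_by_key = {resource_key(o): o for o in desired}
    live_by_key = {resource_key(o): o for o in live}
    plan: dict[str, list] = {"create": [], "update": [], "delete": []}
    for key, obj in desired_by_key.items():
        if key not in live_by_key:
            plan["create"].append(key)
        elif live_by_key[key].get("spec") != obj.get("spec"):
            plan["update"].append(key)  # drift: live spec differs from Git
    if prune:
        # Resources that exist in the cluster but are no longer in Git.
        plan["delete"] = [k for k in live_by_key if k not in desired_by_key]
    return plan


git_state = [
    {"kind": "Deployment", "metadata": {"name": "web"}, "spec": {"replicas": 3}},
    {"kind": "Service", "metadata": {"name": "web"}, "spec": {"ports": [{"port": 80}]}},
]
cluster_state = [
    {"kind": "Deployment", "metadata": {"name": "web"}, "spec": {"replicas": 2}},
    {"kind": "Deployment", "metadata": {"name": "old-worker"}, "spec": {"replicas": 1}},
]

print(plan_sync(git_state, cluster_state, prune=True))
```

Because Git is the source of truth, rollback becomes a Git operation: reverting a commit changes the desired state, and the next reconciliation brings the cluster back in line.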

The following diagram shows an illustrative example of deploying code to multiple environments using ArgoCD. Changes are first applied to the staging environment for testing. Once approved, the same changes are promoted to the production environment, maintaining consistency and reliability across deployments.

Next steps

As we continue to explore the ISV journey of Kubernetes adoption, our upcoming blog series will delve deeper into the resilience, efficiency, and security of your deployments.

- Observability (Part 2): Unpack the tools and strategies for gaining insight into your applications and infrastructure, so you can monitor performance and troubleshoot issues effectively.

- Reliability and scale (Part 3): Explore how to achieve zero-downtime deployments and how to manage readiness/liveness probes, application scaling, DNS, and CNI to maintain optimal performance under varying loads.

- Disaster preparedness (Part 4): Discuss the importance of a solid disaster recovery plan, including backup strategies, practices, and regular drills to ensure business continuity.

- Security (Part 5): Delve into securing your Kubernetes environment, covering best practices for network policies, access controls, and securing application workloads.

Each of these topics is crucial for navigating the complexities of Kubernetes, enhancing your infrastructure’s resilience, scalability, and security. Stay tuned for insights to help empower your Kubernetes journey.

Ready to embark on a transformative journey and get the most from Kubernetes on DigitalOcean? Sign up for DigitalOcean Kubernetes to get started.
