Cloud Performance Analysis Report

Prepared for DigitalOcean by Cloud Spectator LLC

Executive Summary

DigitalOcean commissioned Cloud Spectator to evaluate the performance of virtual machines (VMs) from three different Cloud Service Providers (CSPs): Amazon Web Services, Google Compute Engine and DigitalOcean. Cloud Spectator tested the various VMs to evaluate the CPU performance, Random Access Memory (RAM) and storage read and write performance of each provider’s VMs. The purpose of the study was to understand Cloud service performance among major Cloud providers with similarly-sized VMs using a standardized, repeatable testing methodology. Based on the analysis, DigitalOcean’s VM performance was superior in nearly all measured VM performance dimensions, and DigitalOcean provides some of the most compelling performance per dollar available in the industry.

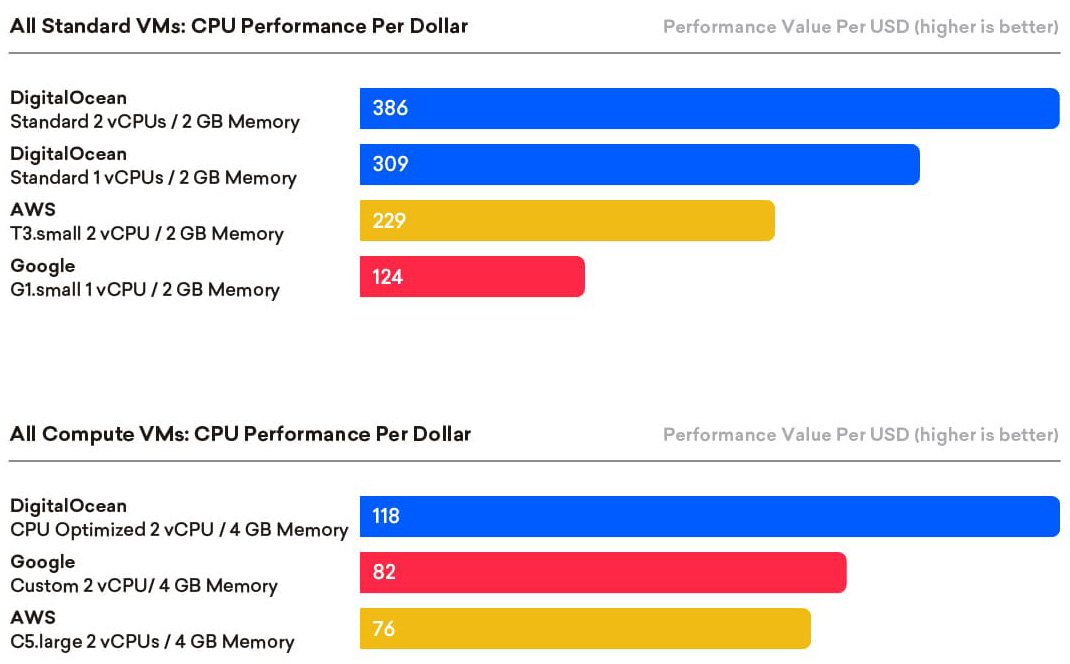

Figure 1 - CPU Performance Per Dollar of all tested VMs

The primary drivers for DigitalOcean’s strong performance were three factors:

- DigitalOcean’s competitive pricing model

- DigitalOcean’s excellent CPU and memory scores

- DigitalOcean’s exceptional random read performance and best-in-class random write scores

Key findings and observations from this analysis are highlighted below, with more detailed analysis following in the body of the report.

Key Findings Observed in This Report

The following summary findings were gleaned from the testing performed by Cloud Spectator during this engagement:

vCPU and Memory Performance

Compute and memory performance were tested using the GeekBench4 test suite. The following highlights emerged from these tests:

- DigitalOcean’s basic VMs outperformed rival offerings. DigitalOcean’s basic 2 vCPU 2GB Droplet surpassed Amazon’s recently-released T3.small with unlimited “burst,” and DigitalOcean’s 1 vCPU 2GB Droplet outperformed the burstable Google g1-small VM.

- Within the compute-optimized class, DigitalOcean’s 2 vCPU 4GB model demonstrated competitive performance with the more costly AWS C5.large VM.

- Both DigitalOcean’s and AWS’s compute-optimized VMs surpassed GCE’s custom Skylake machine by over 20%.

Storage Speed

Storage performance was tested using the FIO tool to perform a variety of tests. The 4K random read and random write results are summarized below.

- DigitalOcean VMs posted remarkable 4K read speeds, achieving performance 100-200x greater than rival offerings regardless of type or vCPU count.

- For 4K random write performance, DigitalOcean also posted the best scores, although raw results were not as dominant as the read testing.

Price-Performance

Price-Performance, or the value of a given Cloud service, derived by dividing performance by price (compute/memory and storage), is summarized below:

- DigitalOcean provided excellent price-performance value across the board, which is a result of its lower prices combined with excellent performance.

- DigitalOcean’s basic VM sizes were able to achieve the highest price-performance values, not only within their category or class, but across all providers’ respective VM types.

- DigitalOcean’s compute-optimized VM achieved the highest price-performance value within the compute-optimized class by a large margin.

The details of the testing setup, design and methodology along with full results, are explained in the body of the report.

Introduction

DigitalOcean commissioned Cloud Spectator to assess the performance of virtual machines (VMs) from three different Cloud Service Providers (CSPs), or providers: DigitalOcean (DO), Amazon Web Services (AWS), and Google Compute Engine (GCE). Cloud Spectator tested the various VMs from these providers to evaluate the CPU performance, RAM and storage for each provider’s VMs. The purpose of the study was to understand the VM performance between Cloud providers with similarly-sized and classed VMs using a standardized and repeatable testing methodology. Performance information for the specified basic 1 and 2 vCPU 2GB and compute-optimized 2 vCPU 4GB VMs was gathered using the Geekbench4 and FIO benchmarking tools. Each VM type was provisioned with four duplicate VMs to limit sampling error. Data was then collected during 100-iteration tests.

This project focused on comparison of performance data for CPU, RAM and storage IOPs for random read and write. The CPU-Memory composite scores and storage scores were evaluated on their own, and then were used to calculate the price-performance value for all provider VM offerings. The price-performance value for each VM was calculated by dividing performance averages by monthly cost in USD, with separate scores calculated for storage read and write. This simple price-performance formula allows the universal comparison of VMs offered by the respective Cloud Service Providers included in this analysis.
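The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, assuming only what the text states (average performance divided by monthly price in USD, with separate values for CPU-Memory, storage read and storage write). The `VmResult` record, the function names and the numbers are hypothetical placeholders, not data or tooling from this study.

```python
from dataclasses import dataclass


@dataclass
class VmResult:
    """Averaged benchmark results and list price for one VM type."""
    name: str
    multicore_score: float  # average Geekbench4 multi-core score
    read_iops: float        # average 4K random read IOPs
    write_iops: float       # average 4K random write IOPs
    monthly_usd: float      # monthly price in USD


def price_performance(performance: float, monthly_usd: float) -> float:
    """Linear, unweighted value: average performance per USD per month."""
    if monthly_usd <= 0:
        raise ValueError("monthly price must be positive")
    return performance / monthly_usd


def value_scores(vm: VmResult) -> dict:
    """Return the three price-performance values for one VM type."""
    return {
        "cpu": price_performance(vm.multicore_score, vm.monthly_usd),
        "read": price_performance(vm.read_iops, vm.monthly_usd),
        "write": price_performance(vm.write_iops, vm.monthly_usd),
    }


# Placeholder numbers for illustration only; not results from this report.
example = VmResult("example-vm", 4000.0, 50000.0, 20000.0, 20.0)
scores = value_scores(example)
print(scores)
```

Because the formula is a plain ratio, a VM with half the price and the same score receives exactly twice the value, which is why lower-priced VMs can lead on value even when raw scores are similar.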

Using this proven Cloud sampling and testing methodology, Cloud Spectator evaluated the Cloud services based on price-performance calculations, while detailing specific strengths and weaknesses of each provider’s VMs based on the objective performance results. Given the inherent variability of Cloud services, these methods are necessary to provide reliable and comparable analyses of Cloud-based infrastructure-as-a-Service (IaaS) offerings.

VM Specs and Selection Methodology

Virtual machines (VMs) for this engagement focused on 1 and 2 vCPU VMs. They were grouped and classified as basic 1 vCPU 2GB, basic 2 vCPU 2GB and compute-optimized 2 vCPU 4GB machines. All instances were deployed with a current release of Ubuntu 18.04 from the respective providers. Local SSDs were used for DigitalOcean’s Droplet VMs, while Elastic Block Store (EBS) general purpose (gp2) block storage was used for AWS, and persistent solid state (SSD) block storage was used with GCE. Basic VMs are targeted at general purpose workloads or application testing, and are configured with 1:2 and 1:1 vCPU to RAM ratios for Basic 1 vCPU and 2 vCPU instances, respectively. Basic instances are configured to share cores with other VMs. Some may “burst” temporarily (AWS T3.small), while others, e.g. GCE g1-small, opportunistically use available resources on the parent server for performance boosts when needed for a particular workload. On the other hand, compute-optimized VMs are configured with dedicated vCPUs operating at full capacity with a 1:2 vCPU to RAM ratio for more demanding workloads. The VMs selected for this engagement are listed in the tables below:

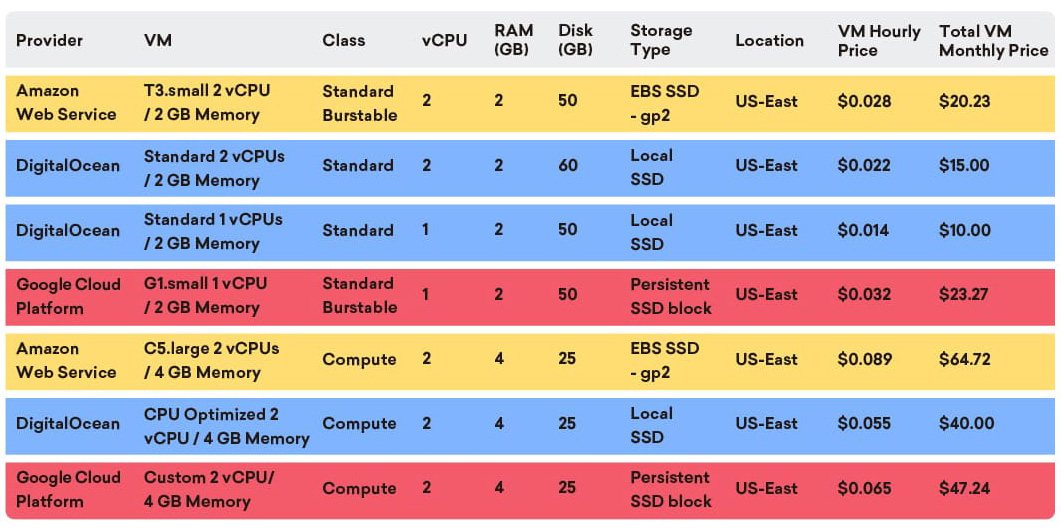

Figure 6.1 - DigitalOcean, AWS and GCE VMs tested and compared

The test design and methodology used in this analysis are described in the following sections.

Test Design and Methodology

The test design and methodology are described below for each of the VM performance dimensions evaluated, as follows: CPU and RAM, and storage random read/write. Synthetic testing was performed on the selected VMs to enable objective comparisons of performance.

Synthetic Testing: CPU & Memory:

CPU and memory testing were conducted with the Geekbench4 benchmarking suite, which allows modern testing scenarios such as floating-point computations, encryption and decryption, as well as image encoding, life-science algorithms and other use cases.

Synthetic Testing: Storage:

Storage results were obtained using FIO (Flexible I/O tester) using 4KB blocks and threads corresponding to vCPU count. Several thousand 60-second random iterations were conducted to compensate for the high variability often seen when stressing storage volumes. Results were gathered and represented in IOPs (input/output operations per second).

Test Design Considerations:

Testing was conducted on specific VM types for each provider. Provider VM configurations may yield different results based on underlying infrastructure, virtualization technology and settings (e.g. shared resources), and other factors. Furthermore, factors such as user contention or physical hardware malfunctions can also cause suboptimal performance. Cloud Spectator therefore provisioned multiple VMs with the same configuration to better sample the underlying hardware and enabling technology, as well as improve testing accuracy and limit the effects of underlying environmental variables.

The VMs selected for this engagement were generally-available specified offerings from the various providers. While better performance can often be attained from providers when additional features or support services are purchased, the selected VMs used in Cloud Spectator’s testing do not leverage such value-added services. This helps provide data and test results that are indicative of real-world customer choices from the tested CSPs.

Error Minimizing Considerations

Four duplicate VMs were deployed during testing to minimize sources of error prevalent in a Cloud hosting environment. The most notable challenge is the Noisy Neighbor Effect. Testing duplicate VMs mitigates most non-specific errors that could be attributed to a singular parent instance or storage volume. By minimizing possible sources of error, more accurate and precise performance samples can be collected during testing.
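As a rough sketch of how samples from duplicate VMs might be summarized, the Python below pools per-iteration scores and reports the mean together with the coefficient of variation (CV) used in the variability charts later in this report. It assumes the usual definition of CV (sample standard deviation divided by the mean, as a percentage) and assumes that samples are pooled across the duplicates; the report does not state either detail, and the function name and scores are hypothetical.

```python
import statistics


def pooled_summary(samples_per_vm):
    """Pool per-iteration scores from duplicate VMs; return (mean, CV %)."""
    pooled = [score for vm_samples in samples_per_vm for score in vm_samples]
    if len(pooled) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(pooled)
    # Assumption: CV is the sample standard deviation relative to the mean.
    cv_percent = statistics.stdev(pooled) / mean * 100
    return mean, cv_percent


# Placeholder scores: 4 duplicate VMs x 3 iterations each.
# The study itself used 100-iteration tests.
samples = [
    [100.0, 102.0, 98.0],
    [101.0, 99.0, 100.0],
    [97.0, 103.0, 100.0],
    [100.0, 100.0, 100.0],
]
mean, cv = pooled_summary(samples)
print(f"mean={mean:.1f} cv={cv:.2f}%")
```

A single VM on a congested host shifts the pooled mean only partially, while the CV rises to flag the inconsistency, which is the practical benefit of testing duplicates.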

Performance Summary

In the sections below, the performance results from Cloud Spectator’s testing are presented. Graphs of the performance results, along with interpretive narratives, are provided for all tests.

Price-Performance Ratio

Price-performance, also known as value, compares the performance of a Cloud service to the price of that service. Thus, price-performance offers a universal metric for comparing Cloud service value. Price-performance is calculated from the average Geekbench4 multi-core score divided by the monthly price in US Dollars (USD). A higher price-performance score indicates greater value for a given VM configuration.

Generally, Basic, burstable or smaller VMs tend to achieve higher price-performance values than compute-optimized or larger VMs with static vCPU allocations. Larger VMs with specifically provisioned resources are typically used for larger production use cases, and therefore have higher prices. Thus, this evaluation was limited to comparable size categories to illustrate the differences between these types of machines.

CPU Performance

Single-core vs Multi-core Preface

Multi-core CPU test averages tend to display large differences based on vCPU count, as opposed to single-core tests. The results section below provides an overview of the single-core performance, while multi-core performance is used for price-performance analysis.

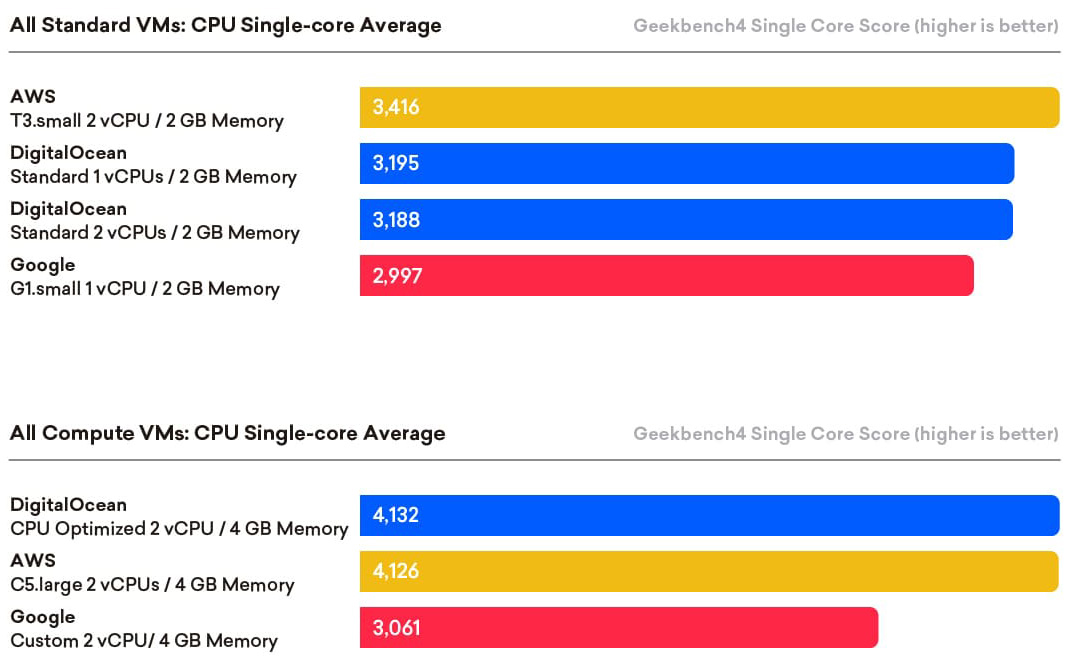

CPU Performance for all VMs

The chart below depicts the CPU single-core performance of all VMs evaluated in this study. The GeekBench4 single-core score represents the processing speed of one CPU (or core) processing a single stream of instructions rather than multiple parallel streams per core. Many consumer applications, although they are multithreaded, rarely utilize more than one CPU thread at a time. Thus, the single-core CPU test is a reasonable real world test for typical consumer workloads.

Figure 9.1 - Single-core Averages

In most production scenarios, all vCPUs will be utilized in multi-core VMs if a workload or application is able to exploit them. Though not discussed in detail, single-core scores provide a universal performance baseline that is useful when comparing multi-core performance.

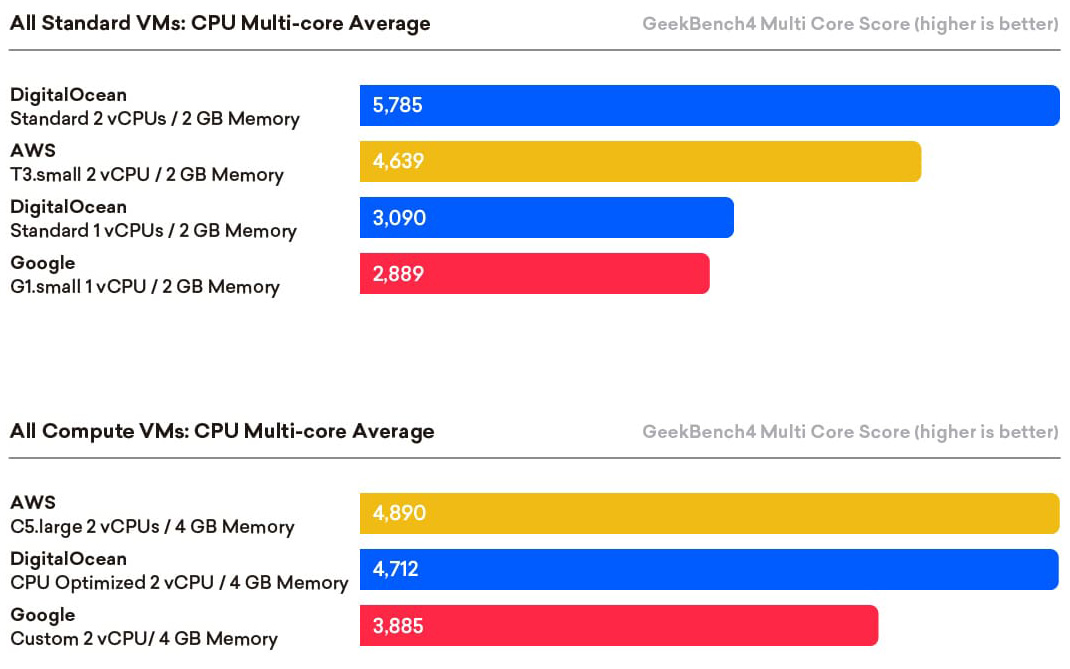

CPU Multi-core Overview

The chart below depicts the CPU multi-core performance of all VMs covered in this study. As core count, or vCPU quantity, increases, VMs generally produce higher scores, as depicted in the graph below. Compute-optimized machines are shown below with solid colored bars, while basic VMs are displayed with pattern filled bars.

Figure 10.1 - Multi-core Average

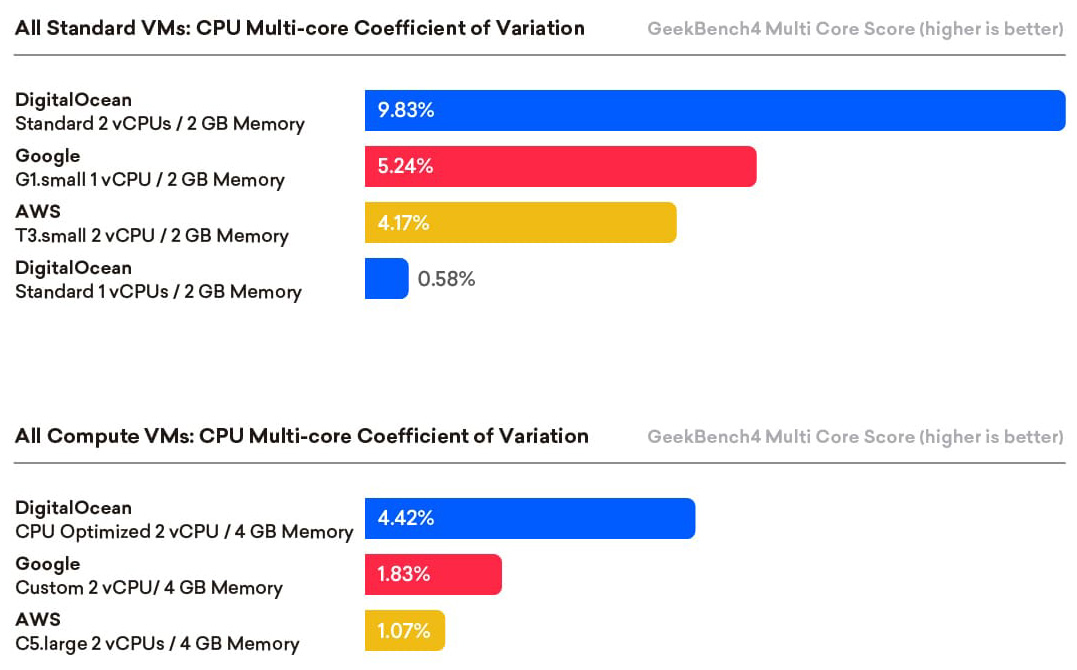

Figure 10.2 - Multi-core Coefficient of Variation

- DigitalOcean’s Basic 2CPU 2GB instance displayed excellent scores, handily outperforming other offerings, including AWS’s newly-released T3.small with unlimited CPU burst capability. This speed was accompanied by approximately double the performance variation, although that variation remains well within expectations for Cloud servers given the effects of noisy neighbors or shared hosting infrastructure.

- AWS’s standard burstable T3.small and compute-optimized C5.large VMs provided comparable scores to DigitalOcean’s compute-optimized 2CPU 4GB VM, while GCE’s custom 2CPU 4GB Skylake lagged behind by a 25% margin.

- Among the smallest Basic offerings, DigitalOcean’s 1CPU 2GB instance was found to have impressively low variation while outperforming GCE’s opportunistic g1-small VM.

*AWS unlimited bursting may incur additional charges.

In the next section, the analysis will focus on Cloud storage performance.

Storage Performance

Storage performance results are summarized in the sections below. The testing methodology for storage ensured that all machines were tested for several thousand iterations using FIO with a block size of 4KB, queue depth of 32 running at 1 thread per vCPU for random read and write.
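For readers who want to approximate these runs, the sketch below builds an FIO command line from the parameters stated in this report: 4KB blocks, a queue depth of 32, one thread per vCPU, and 60-second iterations. The exact job options used in the study are not given, so the I/O engine, direct I/O, file size and target path are assumptions, and the `fio_command` helper is hypothetical.

```python
def fio_command(mode, vcpus, target):
    """Build an fio argument list for a 4K random read or write run."""
    if mode not in ("randread", "randwrite"):
        raise ValueError("mode must be 'randread' or 'randwrite'")
    return [
        "fio",
        f"--name=4k-{mode}",
        f"--rw={mode}",
        "--bs=4k",               # 4KB block size (from the report)
        "--iodepth=32",          # queue depth of 32 (from the report)
        f"--numjobs={vcpus}",    # one job per vCPU (from the report)
        "--thread",              # run jobs as threads rather than processes
        "--runtime=60",          # 60-second iterations (from the report)
        "--time_based",
        "--ioengine=libaio",     # assumption: async engine so iodepth applies
        "--direct=1",            # assumption: bypass the page cache
        "--size=1g",             # assumption: test file size
        f"--filename={target}",  # hypothetical test file path
        "--group_reporting",
        "--output-format=json",
    ]


# Print the command for a 2 vCPU random read run without executing it.
cmd = fio_command("randread", 2, "/tmp/fio-test.bin")
print(" ".join(cmd))
```

The command is only printed here; running it repeatedly and collecting the reported IOPs from the JSON output would yield the kind of per-iteration samples summarized in the charts below.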

Storage Performance for ALL VMs (Read)

Below, storage random read results for all providers and VM types are displayed. Summary details are provided below. The chart displays compute-optimized VMs as solid colors while Basic VMs are pattern filled.

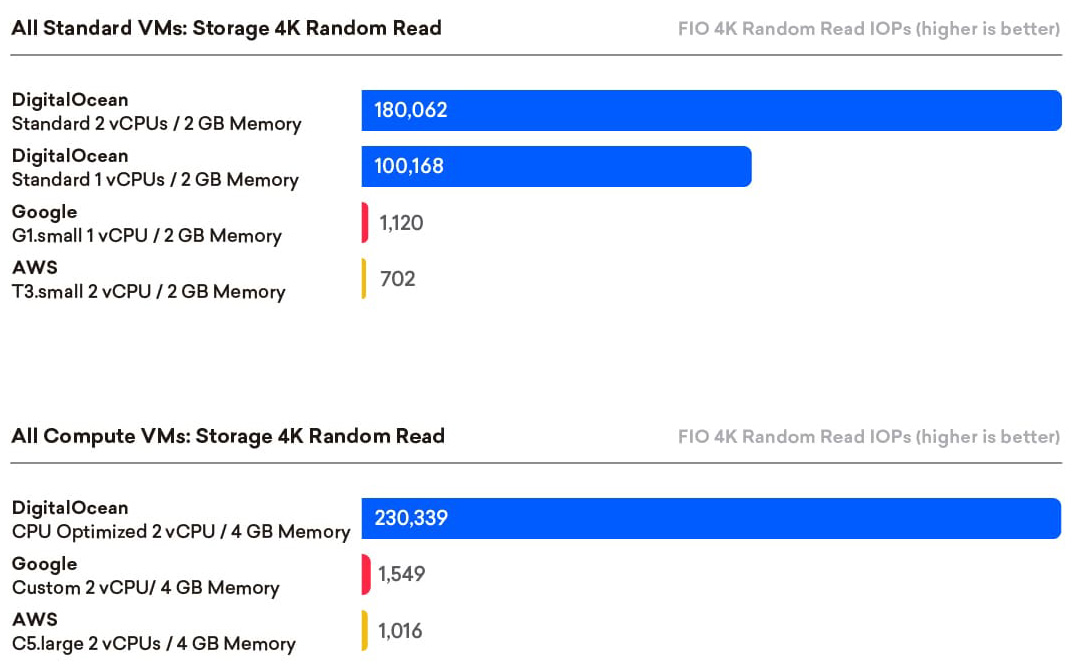

Figure 11.1 - Storage 4K Read IOPs

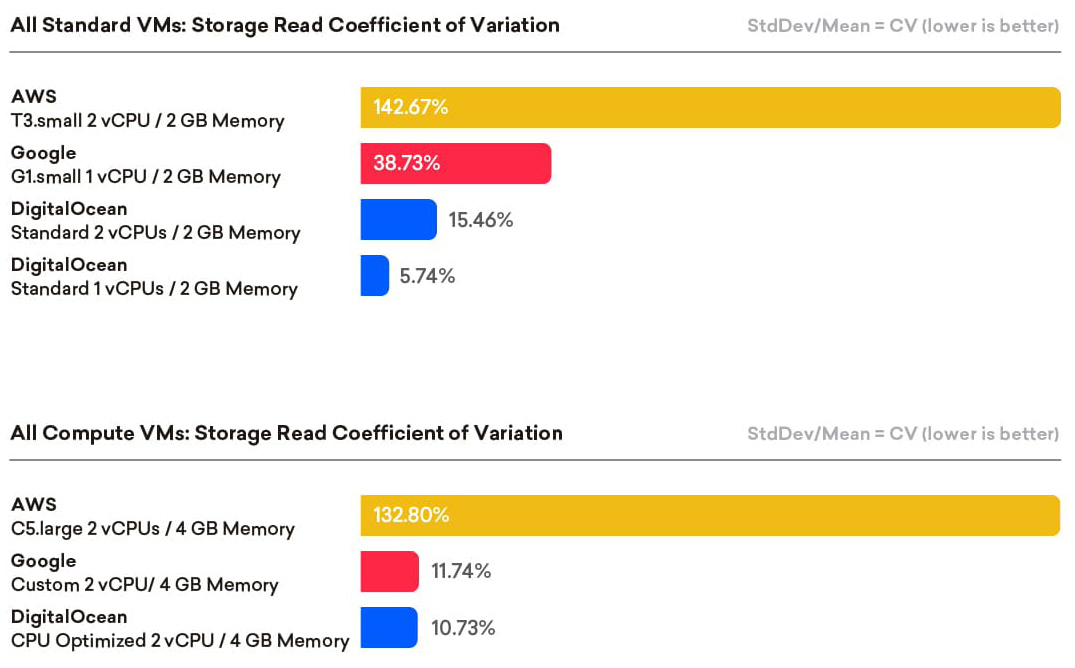

Figure 11.2 - Storage Read Coefficient of Variation

- DigitalOcean provided phenomenal read speeds, surpassing all competing VM types by a factor of 65X. The high IOPs performance was achieved with above average performance stability (low variability). DigitalOcean’s compute-optimized 2CPU 4GB instance achieved a maximum average of 230,000 IOPs, while the smallest 1 vCPU instance still managed an impressive 100,000 IOPs.

- GCE’s VM offerings beat out both AWS VMs, although both CSPs’ maximum scores are significantly lower compared to those achieved by DigitalOcean for this test.

- AWS, utilizing EBS volumes with burst, showed high variability with CVs exceeding 130%, while GCE and DigitalOcean showed similar variation.

VM size, as described by vCPU count alone, has some role in storage random read performance in this test, though RAM has a much greater effect, as can be seen from the results above for 2 vCPU 2GB instances versus compute-optimized 2CPU 4GB VMs from the same providers. Below, random write results are presented.

Storage Performance for ALL VMs (Write)

Unlike random read performance, random write performance requires numerous background tasks between operations. This includes data allocation and redundancy checking across the large storage arrays typically used in Cloud-based block storage. VM vCPU counts are less important factors in random write performance than vendor storage types. Below, storage write performance results are presented. The data compares all VM sizes and confirms that vCPU core count is a less relevant factor for storage performance than for CPU-Memory testing. Summary observations of these test results can be found below the charts.

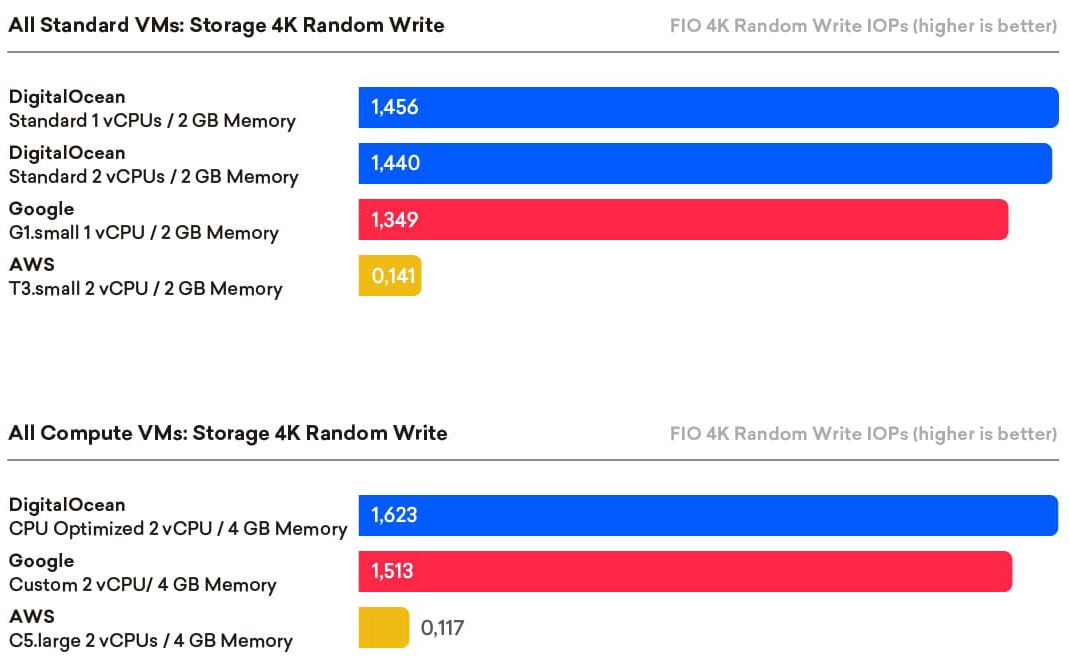

Figure 12.1 - 4K Write IOPs

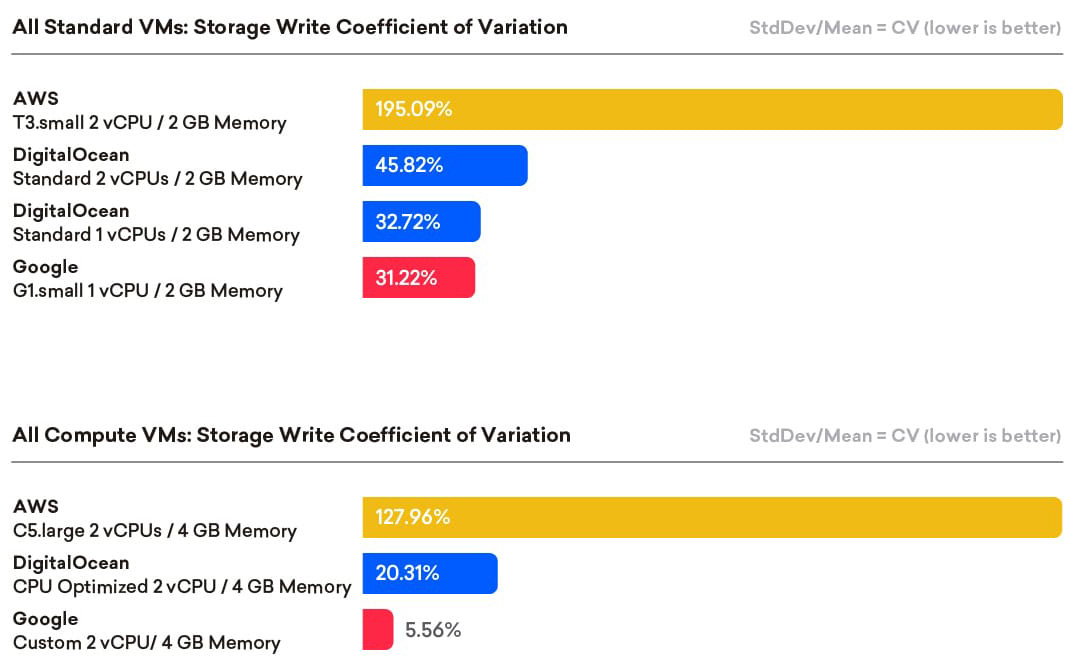

Figure 12.2 - 4K Write Coefficient of Variation

- DigitalOcean VMs scored well across the board and highest within their respective classes.

- DigitalOcean’s compute-optimized 2CPU 4GB VM outperformed the GCE Skylake instance by a modest margin, with DigitalOcean’s Basic 1 and 2 vCPU scores narrowly behind.

- GCE’s VM write speed is on par with DigitalOcean’s, exhibiting only slightly lower performance variability throughout testing.

- GCE also showed great IOPs consistency regardless of read or write, which is unusual among the tested offerings from all CSPs.

To summarize, DigitalOcean performed well for random write performance, while dominating random read tests. Google provided consistency for both read and write operations, while AWS displayed significantly lower write speeds compared to read along with universally high storage variation due to EBS burst.

VM CPU Performance Per USD (Price-Performance, or Value)

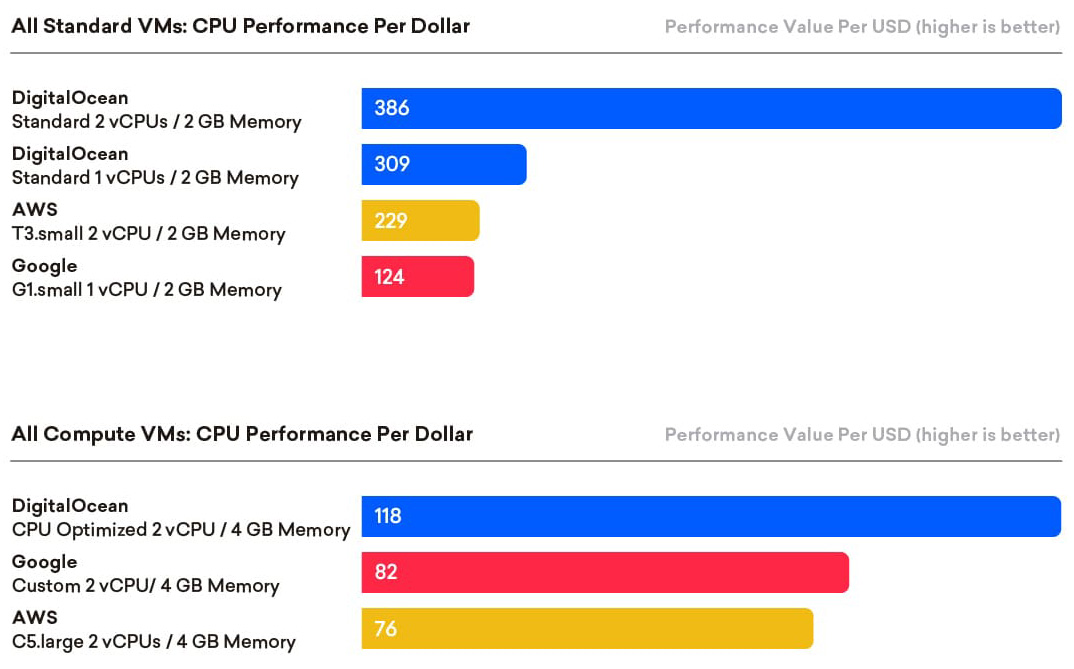

This section focuses on the compute and memory price-performance, or value. The values shown are linear and unweighted, using the multi-core performance scores (Figure 10.1) and monthly price (tables 6.1 - 6.3). Higher scores indicate higher value for each USD spent on the tested VMs.

CPU Price-Performance ALL VMs

The chart below summarizes all VM sizes evaluated for price-performance. Compute-optimized VMs are found in solid colors, while Basic VMs are shown with a pattern fill.

Figure 13.1 - CPU Price-Performance

- DigitalOcean achieved the highest price-performance ratios for compute-optimized VMs, as well as for both sizes of Basic VMs.

- DigitalOcean’s lower prices, coupled with higher CPU performance, make them prime general use VMs where performance requirements may be variable or unknown.

- In general, as VM sizes increase, price-performance trends down. The exceptions are DigitalOcean’s 2 vCPU Basic VM with notably high CPU performance, and Amazon’s T3.small, though this machine may incur substantial fees if used for CPU intensive workloads. That said, the increased RAM of compute-optimized machines from these providers may be required for use cases such as database operations or moderately busy multi-role Web servers.

- Overall, standard VMs showed greater price-performance value across all providers and sizes.

Figure 13.2 - VM Total Monthly Price

In summary, DigitalOcean VMs provide excellent value across all sizes and both VM groups (Basic and Compute-Optimized), with Basic class machines having a price-performance advantage over the compute-optimized machines. With the lowest prices and competitive CPU scores, DigitalOcean achieved superior performance scores, even against some of the newest hyperscale offerings. The following section details storage price-performance.

Storage Performance Per USD (Price-Performance, or Value)

This section focuses on storage price-performance value. Storage performance can be a major bottleneck for certain applications, and often storage pricing can become a budgeting challenge for enterprises. The values shown in the sections below are linear and unweighted, using the average performance scores for random read (Figure 11.1) and random write (Figure 12.1), as well as monthly prices (tables 6.1-6.3). Higher scores indicate a better price-performance value per USD spent compared to competing VMs in the same size category.

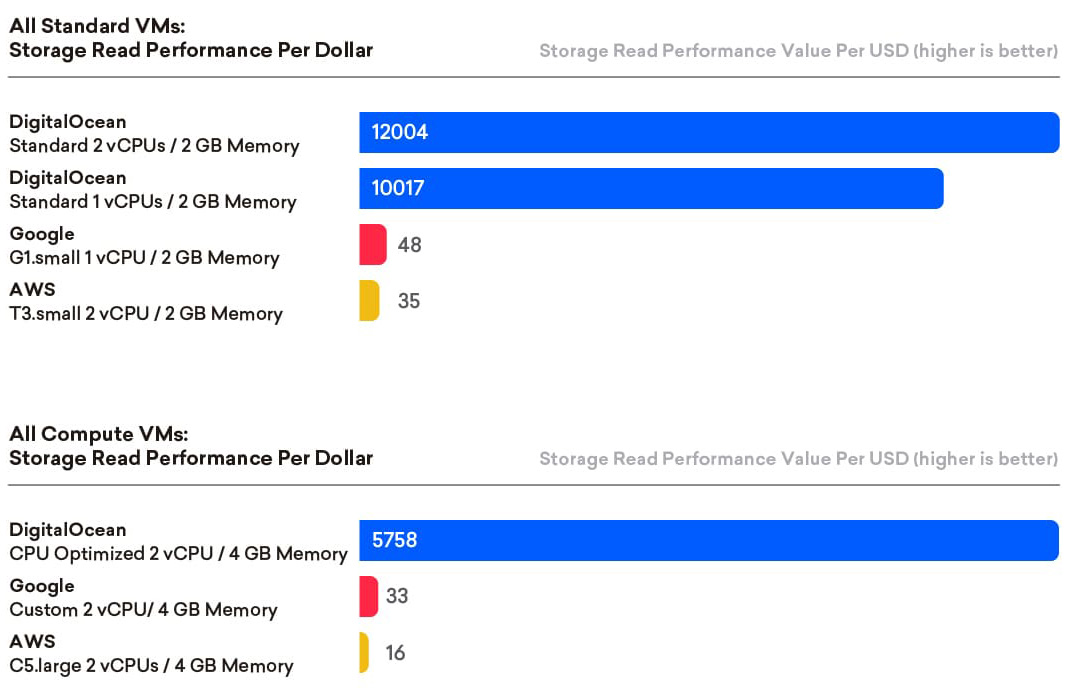

Price-Performance ALL VMs (Read)

In the chart below, price-performance is compared for the specified VMs based on random read speed. This is most useful when considering read-intensive use-cases. Summary observations are provided below.

Figure 14.1 - Read Price-Performance

- DigitalOcean’s VMs demonstrated the highest price-performance read values across the board, outperforming rival providers in every size and class.

- DigitalOcean was superior in read price-performance value for two primary reasons:

- Google’s persistent SSD Block storage displayed read scores slightly exceeding those of AWS’s EBS volumes, although both providers’ scores were lackluster compared to DigitalOcean’s local SSDs.

Overall, DigitalOcean’s offerings were dominant in read speeds, as their local storage provided a significant edge over the block storage offered by other tested providers.

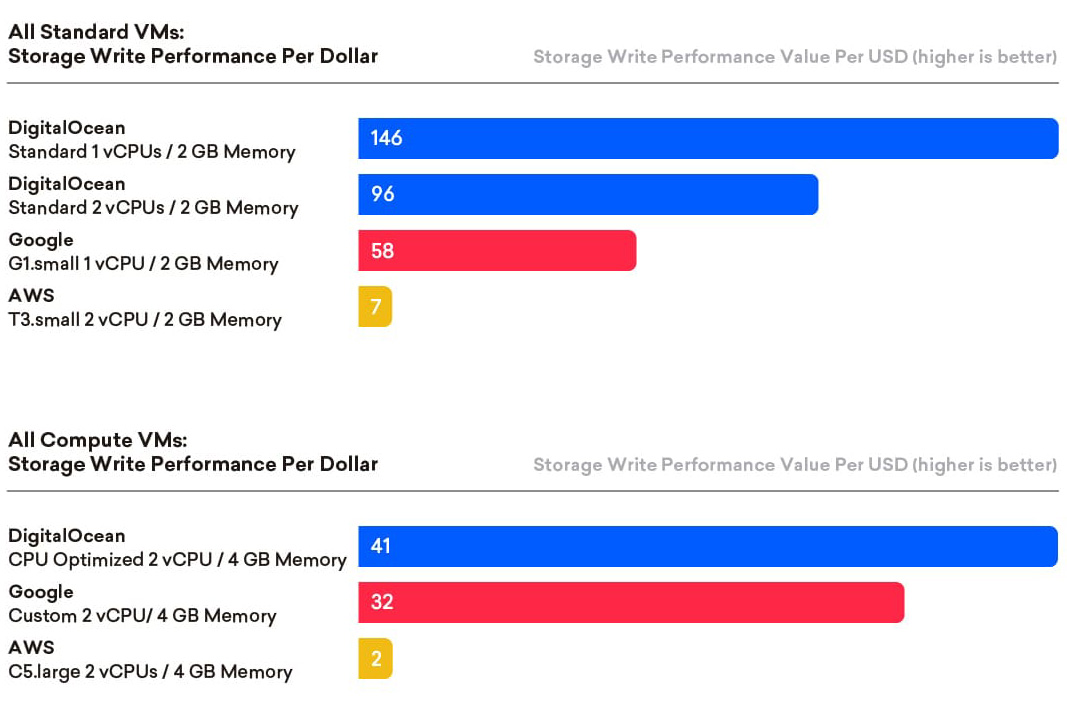

Price-Performance ALL VMs (Write)

Write speed, no matter the drive type or configuration, has always been a technology challenge. The following summary observations were gleaned from the analysis. Compute-optimized VMs are displayed in solid colors, while the Basic VMs are shown in pattern fills.

Figure 15.1 - Write Price-Performance

- DigitalOcean’s Basic 1 vCPU and Basic 2 vCPU VMs delivered the highest price-performance value, followed by GCE’s burstable g1-small instance.

- The remaining DigitalOcean compute-optimized 2 vCPU instance offers the highest price-performance in its class, followed by GCE’s custom Skylake machine.

- DigitalOcean’s high price-performance scores are due to a combination of achieving the fastest write speeds in the respective classes coupled with substantially lower monthly pricing, particularly within the Basic class.

- AWS write scores lagged considerably behind rivals, although the T3.small has a low base price. Both the AWS T3.small and the more costly C5.large exhibited lower price-performance results.

In summary, DigitalOcean provides excellent storage price-performance for both read and write for all VM configurations tested, regardless of machine classification or size.

Conclusion

For this engagement, Cloud Spectator tested Amazon Web Services, Google Compute Engine and DigitalOcean VMs head to head across three categories: Basic 1vCPU 2GB RAM, Basic 2vCPU 2GB RAM and compute-optimized 2vCPU 4GB RAM. The testing and data collection were performed by running exhaustive computational and storage benchmarks on specified VM configurations. From these results, price-performance values for each VM type were determined.

DigitalOcean’s Droplets displayed excellent performance values in all areas. Their CPU-Memory performance, particularly within the Basic class, achieved performance values exceeding those of comparable offerings by a factor of three. Additionally, DigitalOcean’s read speed was vastly superior across all VM types. Storage write price-performance value was less dominant than read results. However, DigitalOcean’s price-performance value remains excellent, with all of its tested VMs leading in the respective categories.

Raw performance testing of CPU and memory demonstrated that DigitalOcean’s Basic 2CPU 2GB offering is a standout configuration, even when compared with the T3.small, Amazon’s most recent series released on August 21st, 2018. In the 2 vCPU compute-optimized category, Amazon’s current VM, the C5.large, provided competitive results, although at 38% higher cost. Both AWS and DigitalOcean offerings surpassed Google’s custom Skylake VM by a margin greater than 20%. While DigitalOcean’s 1vCPU machine was the leading VM, it was challenged by the opportunistic, burstable GCE g1-small in performance. However, the DigitalOcean VM exhibits far less performance variability, which makes it an advantageous choice for small workloads with known requirements.

Based on Cloud Spectator’s testing, DigitalOcean’s local SSD raw read performance was exceptional as compared to Amazon’s EBS and Google’s Persistent SSD Block storage. Raw write performance results were also very good, slightly exceeding Google’s offerings. Overall, DigitalOcean achieved the highest scores across all categories.

While Google provided consistent performance for both read and write operations, Amazon’s EBS performance was approximately 10% of rival offerings during write tests. As noted, Amazon’s Elastic Block Store has a “burst” feature, which was demonstrated in the pointedly high variation seen in the test results. The high performance variability can be remedied by purchasing larger EBS volumes with higher base IOPs and longer burst periods. Higher capacity EBS volumes, however, will add substantially to the price of small machines.
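The relationship between gp2 volume size, base IOPs and burst duration can be approximated with a short calculation. The sketch below is not part of Cloud Spectator's testing; it assumes the gp2 figures published in AWS's EBS documentation (a baseline of 3 IOPs per GiB with a floor of 100, a burst rate of 3,000 IOPs, and a full credit bucket of 5.4 million I/O credits), which may have changed since. The volume sizes shown are arbitrary examples, as the report does not state the sizes tested.

```python
# Assumed gp2 parameters from AWS's published EBS documentation.
GP2_BASELINE_IOPS_PER_GIB = 3
GP2_MIN_BASELINE_IOPS = 100
GP2_BURST_IOPS = 3000
GP2_CREDIT_BUCKET = 5_400_000


def gp2_baseline_iops(size_gib):
    """Baseline IOPs for a gp2 volume of the given size."""
    return max(GP2_MIN_BASELINE_IOPS, GP2_BASELINE_IOPS_PER_GIB * size_gib)


def gp2_burst_seconds(size_gib):
    """Seconds a full credit bucket sustains the burst rate, or None
    when the baseline already meets or exceeds the burst rate."""
    baseline = gp2_baseline_iops(size_gib)
    if baseline >= GP2_BURST_IOPS:
        return None
    # Credits drain at the burst rate while refilling at the baseline rate.
    return GP2_CREDIT_BUCKET / (GP2_BURST_IOPS - baseline)


for size in (100, 500, 1000):
    print(size, gp2_baseline_iops(size), gp2_burst_seconds(size))
```

Under these assumptions a 100 GiB volume sustains its burst for 2,000 seconds and a 500 GiB volume for 3,600 seconds, which illustrates why larger volumes smooth out variability but at a higher monthly storage cost.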

The Cloud Spectator analysis reveals DigitalOcean is competitive with the hyperscale providers such as Amazon Web Services and Google Compute Engine. DigitalOcean combines an aggressive pricing strategy with outstanding storage performance and excellent computational performance. This positions DigitalOcean as a world-class Cloud Service Provider. DigitalOcean offers machines for a variety of use cases, meeting requirements that span individual end-users, developers and large-scale enterprises.
